Wikimedia Conference/2016 report

This page contains the report of the Wikimedia Conference 2016 in Berlin.

The Friendly space policy applied throughout the conference. Practising the friendly space expectations matters for the movement as a whole too, as only this makes effective collaboration possible.

Wednesday

Wednesday was the first of the two pre-conference learning days.

Outcome Mapping

- Title: Capturing Social Change Through Outcome Mapping

- Program: Outcome Mapping

- Presentation: Capturing Social Change Through Outcome Mapping.pdf

- Why Outcome Mapping?

- It allows for qualitative outcomes and stories of our projects and programs and helps to better surface our shared impact.

- It provides a useful framework for getting beyond our direct outputs and products, identifying intermediate qualitative outcomes of influence and higher-level system change.

- Our projects seek deeper changes and impact on the world than may be directly linked to our own immediate project environments.

- Mapping Desired Changes

- 1st kind: The changes we EXPECT to see = changes over which we have the most direct connection or control

- 2nd kind: The changes we HOPE to see = changes that require our direct project work to be effective at leading to further changes

- 3rd kind: The changes we LOVE to see = changes to the wider environment that move us toward our vision

Survey Questions

- Title: 8 Tips for Designing Effective Surveys

- Program: Survey Questions

- Presentation: missing

- A survey is most useful when you...

- Have a specific goal

- Have resources to act on survey results

- Know your audience & know how to reach your audience

- Have support (question design, analysis)

- A survey is not useful when you...

- Are exploring a brand new topic (try interview/focus groups instead)

- Lack experience and have no support (find a mentor!)

- Don't know your audience or don't know how to reach them

- Have a small or simple project; interviews or collaboration might be better

- Always start with survey goals

- What is the overall project a survey will support?

- How will data from a survey support this goal?

- Develop 1 to 3 specific goals for your survey

- Develop questions for each goal

- Surveys measure self-report

- Self-reported data:

- Attributes: demographic data like occupation, gender

- Attitudes: opinions about open culture

- Behaviours: past or current activities

- Knowledge: awareness of WikiProjects, learning concepts

- Surveys don't measure facts; everything is self-reported

- Surveys measure future activities poorly, but you can measure current interest


- Have specific, concise questions

- Poor: Did you attend the March edit-a-thon series regularly? ("Regularly" means something different to different people.)

- Better: Which, if any, of the edit-a-thon series in March did you participate in? Choose all that apply

- Time is often missing; make sure to include a specific time frame

- Don't get too wordy; get to the point quickly

- Avoid double-barreled questions

- Poor: Have you edited Wikipedia and Commons before this workshop?

- Better:

- Have you ever edited Wikipedia?

- Have you ever uploaded a photo to Wikimedia Commons?

- Have you ever edited Wikimedia Commons?

- Watch out for the words: "and", "or"

- Don't double-barrel response options either!

- Avoid jargon or abbreviations - write out or explain them

- Poor: To what extent are you satisfied with using Visual Editor?

- Better: To what extent are you satisfied with using Visual Editor (the new tool that allows you to edit an article directly)?

- If a question relies on a concept respondents may not know, explain it in other words

- Ask neutral and fair questions

- Poor: Don't you agree that laptops should be available for use at every editing workshop?

- Better: To what extent do you agree or disagree that laptops should be available to use at every editing workshop?

- Use both positive and negative adjectives (e.g. satisfied/dissatisfied, difficult/easy) in the question

- "To what extent" helps you to stay balanced

- Think of questions as 2 parts

- Example: Question: How many hours, if any, do you plan to edit Wikipedia next week?

- Example: Response options:

- None

- Less than 2 hours

- 2 to 5 hours

- 6 to 10 hours

- 11 or more hours

- The other half of writing a question is choosing a good response option!

- Response options are crucial

- Example: Which of the following activities did you spend the most time on during the Edit-a-thon?

- Poor:

- I edited any project

- I uploaded an image to Commons

- I was creating a talk page

- I was not able to attend

- Better:

- I edited Wikipedia

- I uploaded an image to Commons

- I created a talk page

- Other

- Notes:

- "Other (specify)" and "Not applicable" are very helpful options

- Use the same tense for all answers; don't mix "uploaded" with "was creating"

- Quick review of response types

- Structured (fixed response)

- Pairs

- Multiple Choice

- Check all that apply

- Ranking

- Scales

- Non-structured (open-ended)

- Fill in blank

- Open-ended: "What is your opinion of X?"


- Resources: Survey Support Desk

- For WMF staff, Wikimedia affiliates, and volunteers

- What is in the Survey Support Desk?

- Strategy, management, and communications

- Reaching people with surveys

- Writing and designing questions

- Privacy and data

- Analysing and sharing survey results

- Trying to find someone with survey experience?

- Use Category:Survey skills on Meta to find people who have done surveys

- Email Edward Galvez at surveys@wikimedia.org for consulting support or access to Qualtrics
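The tips on double-barreled questions and on response options can be sketched as a small Python check. Both functions are hypothetical helpers, not part of any Wikimedia survey tool: `flag_double_barrel` only marks questions containing "and"/"or" for a person to re-read (balanced wording such as "agree or disagree" is flagged too), and `bucket_hours` assumes whole-hour answers, so every value falls into exactly one of the example's response options.

```python
import re


def flag_double_barrel(question: str) -> list[str]:
    """Return every standalone "and"/"or" in a question so a person can re-read it.

    A hit is not proof of a double-barreled question: balanced wording
    such as "agree or disagree" is flagged as well.
    """
    return re.findall(r"\b(and|or)\b", question, flags=re.IGNORECASE)


def bucket_hours(hours: int) -> str:
    """Map a whole number of planned hours to exactly one response option."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    if hours == 0:
        return "None"
    if hours < 2:
        return "Less than 2 hours"
    if hours <= 5:
        return "2 to 5 hours"
    if hours <= 10:
        return "6 to 10 hours"
    return "11 or more hours"


print(flag_double_barrel("Have you edited Wikipedia and Commons before this workshop?"))  # ['and']
print(flag_double_barrel("Have you ever edited Wikipedia?"))  # []
print([bucket_hours(h) for h in (0, 1, 2, 5, 6, 10, 11)])
```

Writing the options as ordered, non-overlapping ranges makes gaps and overlaps easy to spot before the survey goes out.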

SMART targets

- Program: SMART targets

- Presentation: missing

SMART stands for Specific, Measurable, Assignable, Realistic, and Time-related: every objective you aim for should meet all five criteria. Each criterion means:

- Specific – target a specific area for improvement.

- Measurable – quantify or at least suggest an indicator of progress.

- Assignable – specify who will do it.

- Realistic – state what results can realistically be achieved, given available resources.

- Time-related – specify when the result(s) can be achieved.
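One way to keep the five criteria visible while drafting objectives is to give each one its own field, as in this minimal Python sketch. The class name, field names and the example objective are hypothetical illustrations, not a Wikimedia template.

```python
from dataclasses import dataclass, fields


@dataclass
class SmartTarget:
    """One objective with a written answer for each SMART criterion."""
    specific: str      # area targeted for improvement
    measurable: str    # quantity or indicator of progress
    assignable: str    # who will do it
    realistic: str     # result achievable with available resources
    time_related: str  # when the result can be achieved

    def missing_criteria(self) -> list[str]:
        """Return the names of the criteria that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Hypothetical example: nobody has been assigned yet.
target = SmartTarget(
    specific="Improve articles about local museums",
    measurable="Number of articles improved",
    assignable="",
    realistic="20 articles, given three regular volunteers",
    time_related="By 31 December 2016",
)
print(target.missing_criteria())  # ['assignable']
```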

Logic models


- Program: Logic models

- Presentation: missing

A logic model is a tool used by funders, managers, and evaluators of programs to evaluate the effectiveness of a program. The model defines not only the direct outputs of a project but also its indirect outcomes and impact.

Grants:Evaluation/Logic models gives a detailed description of how to use a logic model.

An on-wiki example of a logic model is the humorous Behind the Scenes of the Wikimedia Conference 2016.

See also: Impact working session, the session in which we created logic models.
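The point that a logic model goes beyond direct outputs can be made concrete with a small data structure. This Python sketch assumes a common inputs, activities, outputs, outcomes, impact layout; the stage names and the example project are assumptions, not taken from Grants:Evaluation/Logic models.

```python
from dataclasses import dataclass, field

# Assumed stage order, from resources put in to long-term change.
STAGES = ("inputs", "activities", "outputs", "outcomes", "impact")


@dataclass
class LogicModel:
    """A project's logic model; each stage is a list of short statements."""
    project: str
    inputs: list[str] = field(default_factory=list)      # resources put in
    activities: list[str] = field(default_factory=list)  # what the project does
    outputs: list[str] = field(default_factory=list)     # direct, countable results
    outcomes: list[str] = field(default_factory=list)    # indirect changes that follow
    impact: list[str] = field(default_factory=list)      # long-term change sought

    def missing_stages(self) -> list[str]:
        """Return the stages that have no statements yet."""
        return [stage for stage in STAGES if not getattr(self, stage)]


# Hypothetical draft that only lists outputs, the gap a logic model exposes.
draft = LogicModel("Edit-a-thon", outputs=["Articles created"])
print(draft.missing_stages())  # ['inputs', 'activities', 'outcomes', 'impact']
```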

Qualitative Data

- Program: Qualitative Data

- Presentation: missing

- Title: Quick & Dirty Qualitative Analysis - Basic & Advanced Word Clouds

- When to use:

- To show why you made a choice based on qualitative data

- To visualise statistics

- To visualise survey responses

- To make a basic Word Cloud with your raw qualitative data:

- Go to http://www.wordle.net/

- Click "Create your own."

- Copy and paste all your text into the text box, or, if your data is a web page such as a blog or Twitter feed, enter its URL

- Use the menu options to adjust color scheme, font, and layout (and save Word Cloud as PNG image file)

- To make Advanced Word Clouds in 3 easy steps

- Data Cleaning

- Remove any irrelevant text from your responses/text (like references to subjects not relevant to the core subject)

- Correct any misspelled words

- Thematic Coding

- Categorisation techniques to help organise related responses

- Code each suggestion/response to one or more categorical themes (topics). In a spreadsheet, for example, put one response per row in column A, a summary of its first topic in column B, its second topic in column C, its third in column D, and so on; the more topics a response covers, the more columns it uses

- List all the topics, merge identical ones, and after each topic write the number of times it was listed, for example:

- Education program:7

- GLAM:5

- Edit-a-thons:4

- The number after the colon is the topic's weight in the Word Cloud

- Code for Directionality

- Associate meaning to ambiguous themes/topics/response terms by:

- Adding a second layer of coding for type of support, so that themes can be interpreted in that context

- Coding attitude data for valence, categorising comments as positive, negative or neutral, and presenting a separate Word Cloud for each category


- To make an Advanced Word Cloud with your coded data:

- Go to http://www.wordle.net/

- Click "Advanced" from the menu tabs

- Copy and paste your consolidated list, formatted one entry per line as [topic label]:[number of observations], for example:

Education program:7

- Now we have an advanced word cloud in which the combined topic labels are sized by observation frequency
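The thematic coding, valence coding and "topic:number" steps above can be sketched in Python, assuming each coded row is a valence label plus its topic cells (columns B, C, D, ...). The function names and sample rows are hypothetical; the output is the weighted list to paste into the Advanced tab.

```python
from collections import Counter


def tally_topics(coded_rows: list[list[str]]) -> Counter:
    """Count how often each topic was coded across all responses.

    Labels are stripped of surrounding spaces so identical topics merge;
    spelling variants should already be fixed during data cleaning.
    """
    counts: Counter = Counter()
    for topics in coded_rows:
        for topic in topics:
            label = topic.strip()
            if label:  # skip empty cells
                counts[label] += 1
    return counts


def tally_by_valence(rows: list[tuple[str, list[str]]]) -> dict[str, Counter]:
    """Build a separate topic tally for each valence code."""
    by_valence: dict[str, Counter] = {}
    for valence, topics in rows:
        by_valence.setdefault(valence, Counter()).update(tally_topics([topics]))
    return by_valence


def as_weighted_list(counts: Counter) -> str:
    """Format a tally as 'topic:number' lines, most frequent first."""
    return "\n".join(f"{topic}:{n}" for topic, n in counts.most_common())


# Hypothetical coded responses: (valence, topic cells) per row.
rows = [
    ("positive", ["Education program", "GLAM"]),
    ("positive", ["Education program", ""]),
    ("negative", ["Edit-a-thons", "GLAM "]),
]
print(as_weighted_list(tally_topics([topics for _, topics in rows])))
print(as_weighted_list(tally_by_valence(rows)["negative"]))
```

Keeping one tally per valence makes it straightforward to produce the separate positive, negative and neutral Word Clouds.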

Data Presentation

- Program: Data Presentation

- Presentation: WMCON 2016 - Learning Day - Tools rotation Data Presentation.pdf

- Title: Data Presentation

Examples of infographics (worse and better ones)

- US Space Travel

- ...

- Space Race

- Distracting colours: U.S. healthcare spending

- Minard, one of the oldest infographics: Charles Minard's 1869 chart showing the number of men in Napoleon’s 1812 Russian campaign army, their movements, and the temperatures they encountered on the return march

- The 3D effect does not work: West Nile Virus

- Not homogeneous: Advanced Science Course

- Time spent on weekends

- Distracting background: Bananas

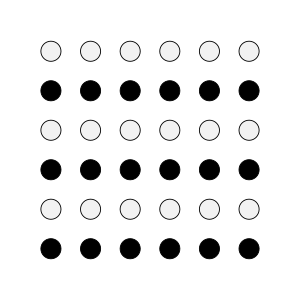

Gestalt Principles of Visual Perception

Gestalt psychology was conceived in the Berlin School of Experimental Psychology and seeks to understand the laws behind our ability to acquire and maintain meaningful perceptions.

- (German: Gestalt [ɡəˈʃtalt] "shape, form")

Key principle: when the human mind perceives a form, the whole has a reality of its own, independent of the parts.

This led to the development of eight Gestalt laws of grouping. Here we highlight only the five most relevant to data representation. You can read more about them on Wikipedia: Gestalt psychology

- Proximity. We tend to see objects that are visually close together as belonging to part of a group.

- Similarity. We tend to see objects that are similar in shape and colour as belonging to part of a group.

- Closure. We tend to perceive objects such as shapes, letters, pictures, etc., as being whole when they are not complete. Specifically, when parts of a whole picture are missing, our perception fills in the visual gap.

- Continuity. Elements of objects tend to be grouped together, and therefore integrated into perceptual wholes if they are aligned within an object. In cases where there is an intersection between objects, individuals tend to perceive the two objects as two single uninterrupted entities.

- Law of good Gestalt. Elements of objects tend to be perceptually grouped together if they form a pattern that is regular, simple, and orderly. This law implies that as individuals perceive the world, they eliminate complexity and unfamiliarity so they can observe a reality in its most simplistic form. Eliminating extraneous stimuli helps the mind create meaning.

This meaning created by perception implies a global regularity, which is often mentally prioritised over spatial relations. The law of good gestalt focuses on the idea of conciseness, which is what all of gestalt theory is based on.

General advice: leaving things out makes a visualisation clearer.

Thursday

Thursday was the second of the two pre-conference learning days.

Magic Button

- Program: Magic Button

- Title: Global Metrics Magic Button

How to use the Global Metrics Magic Button.

- Instructions: How to use the Global Metrics Magic Button

- Tool: https://metrics.wmflabs.org/reports/program-global-metrics

- Notes

- It can sometimes take a while to load, even 5 minutes or so, so be patient in the meantime. ;-)

- Only the article namespace (ns:0) is covered, not sandboxes in the user namespace

Dashboard

- Program: Dashboard

Notes:

- The dashboard is a project management tool for class assignments

- Only the article namespace (ns:0) is covered

- There is a tab for Commons

- Dashboard is still in development!

Wikimetrics


Start:

- Log in to the tool

- Go to: https://metrics.wmflabs.org/cohorts/upload

- Fill in:

- Name -> must be unique; used to identify the metrics report

- Default project -> database name of the project, for example English Wikipedia = enwiki, English Wikibooks = enwikibooks

- Paste User Names -> only the user name, without the prefix "User:", one user name per row

- CentralAuth -> check this box, unless you want to cover only one wiki
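When a list of participants is copied from an event page, it often contains "User:" prefixes, stray spaces and repeats. This hypothetical Python helper prepares the "Paste User Names" text as described above. It is not part of Wikimetrics, and it assumes that the prefix may appear in any letter case.

```python
def cohort_user_names(raw_names: list[str]) -> str:
    """Prepare user names for the Wikimetrics "Paste User Names" field.

    Strips surrounding spaces and a leading "User:" prefix (any letter case),
    drops blank and repeated entries while keeping the original order, and
    returns one user name per row.
    """
    prefix = "user:"
    seen: set[str] = set()
    cleaned: list[str] = []
    for name in raw_names:
        name = name.strip()
        if name.lower().startswith(prefix):
            name = name[len(prefix):].strip()
        if name and name not in seen:
            seen.add(name)
            cleaned.append(name)
    return "\n".join(cleaned)


# Hypothetical sign-up list copied from an event page.
print(cohort_user_names(["User:Example", "  Another user ", "", "user:Example"]))
```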

Your cohort is then linked under My cohorts, where you can create a report with the metrics for that cohort.

Notes:

- It does not need to be public.

Social media

- Program: Understanding Social Media

- Presentation: Wikimedia Conference 2016 - Understanding Social Media.pdf

- Title: Understanding Social Media

- Social media platforms are tools.

- For example, you can use them to ask a question and capture the answers for your own use.

- Why use them?

- Most people on Twitter use Wikipedia every day.

- On some social media platforms, more than half of the community are women.

- We as Wikimedians need to share our projects so that people get the chance to know them.

- To reach out to the world, so the world can use Wikipedia/Wikimedia.

- Jeff Elder runs social media for WMF.

- To submit ideas or text for social media, use: social-media@lists.wikimedia.org

- There is a closed Facebook group for Wikimedia affiliates, WMF, etc.

- Which social media is used?

- What do we do on social media?

- Crowd-pleasing content

- Explanations of the Wikimedia movement

- How do we NOT use social media?

- Do not endorse any products or political candidates

- Do not engage in any dispute with other accounts

- Do not post sarcastic, "snarky" or unfriendly content

- It is often misunderstood, not everyone shares the same humour, and we do not want negative publicity.

- Do not retweet or repost anything that contains any of the above

- Only post media that is owned or co-owned by the Wikimedia Foundation (such as photos we take), in the public domain, or licensed under CC0

- Files licensed under CC BY-SA (and other licences) are NOT compatible with Facebook, so WMF does not use them on any social media.

- Remember the "five pillars" of Wikipedia's fundamental principles

- Visit: Social media

- How can we help you?

- We can reshare your posts when they have broad appeal.

- You can reshare our posts when you need content.

- We can offer guidance or advice on managing your accounts.

- We can help you connect with social media companies and other social media people in the movement.

- We can support your events via #hashtags and mentions.

Education

- Program: Education Program & Resources

- Presentation: missing

How are we active in education?

- Classroom activities

- Adding sound recordings

- Translating

- Formal extracurricular activities

- Wikicamps and wikiclubs

- Government partnerships

Add your activities to outreach:Education/Historic data so they are included in the table.

Friday

Impact working session

- Program: Impact Working Session

- Photos: 12 Impact Working Session

During this session we created logic models for several of our projects.

- The first step was to think of a program, activity or project. Those were written down and added to the board, grouped by theme.

- The next step for each of us was to create a logic model of the chosen subject.

- Once completed, each logic model was explained to a peer and refined; then the roles were switched.

- Each of us then presented our peer's logic model and placed it on the board. The direct goal of the project was on the right side; the further to the left, the less direct the impact of a project's outcomes on the goal of the movement.


Saturday

Metrics of WMUK & WMFR

- Program: Create your own metrics: examples from Wikimedia France and Wikimedia UK

- Presentation: missing

WMUK

Volunteering doesn't fit into fixed boxes, but we have to start somewhere. Key areas:

- Volunteer hours

- 'lead' level

- Gender (+ 'lead')

- Chapter satisfaction

- Skills development

Advocacy: the challenge is that its effects are long-term, so look for something quicker to measure

Indicators: total audience and reach

- Number of active editors involved = GM 1

- Number of newly registered editors = GM 2

- Number of individuals involved = GM 3

- Number of leading volunteers

- Percentage of above who are women

- Number of volunteer hours

- Volunteer hours attributable to women

- Volunteers would recommend WMUK

- Volunteers feel valued by WMUK

- Volunteers have developed new skills

- Images/media added to Commons = GM 4b

- Images/media added to Wikimedia article pages = GM 4a

- % uploaded media used in article pages

- Files with featured status

- Articles added and/or improved = GM 5

- Articles added

- Bytes added and/or deleted = GM 6

- Number of social media followers

- Number of partnerships developed

- Responses to consultations

- Evidence taken into consideration

(GM = global metric)
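Two of the indicators above are percentages derived from other counts. This Python sketch computes them from hypothetical yearly counts. It assumes that "% uploaded media used in article pages" means GM 4a divided by GM 4b; the notes do not spell out the formula.

```python
def share(part: int, whole: int) -> float:
    """Return part as a percentage of whole, rounded to one decimal place.

    Returns 0.0 when whole is zero, so an empty reporting period does not
    raise a ZeroDivisionError.
    """
    if part < 0 or whole < 0:
        raise ValueError("counts must be non-negative")
    if whole == 0:
        return 0.0
    return round(100 * part / whole, 1)


def derived_indicators(leading_volunteers: int, leading_women: int,
                       gm_4b_uploaded: int, gm_4a_used: int) -> dict[str, float]:
    """Compute the two percentage indicators from their underlying counts."""
    return {
        "leading volunteers who are women (%)": share(leading_women, leading_volunteers),
        # Assumption: GM 4a (media used in articles) divided by GM 4b (media uploaded).
        "uploaded media used in article pages (%)": share(gm_4a_used, gm_4b_uploaded),
    }


# Hypothetical counts for one year.
print(derived_indicators(leading_volunteers=40, leading_women=14,
                         gm_4b_uploaded=1200, gm_4a_used=300))
```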

WMFR

What are they?

- Press mentions - number of mentions in print, radio, TV, etc.

- Files supported by the association - number of files uploaded with the help of equipment loans, contests, edit-a-thons, etc.

- Partner satisfaction - once a year we send a survey to our partners asking how they evaluate and value the partnership

- Qualtrics survey

- Are you satisfied with the partnership?

- Would you like to be our partner again?

- In your opinion, have the goals been achieved?

- Would you recommend Wikimedia France to another organisation?

- From 0 to 10, could you rate the partnership?

- ...


- Volunteer hours

- For example: Edit-a-thon; Transport; Lunch with participants; Online tutoring; Preparation of slides

- A conversion grid translates each action into hours

- After each action, the project leader can evaluate the volunteer hours

- Useful to gather all hours

- Hours are valued at the legal minimum wage
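The conversion grid and the valuation at minimum wage amount to a lookup followed by a sum and a multiplication. This Python sketch shows that arithmetic. The hours per action and the wage rate are hypothetical placeholders, because the notes give neither Wikimedia France's actual grid nor a wage figure.

```python
# Hypothetical conversion grid (hours credited per action). The real
# Wikimedia France grid is not given in these notes; values are placeholders.
CONVERSION_GRID = {
    "edit-a-thon": 4.0,
    "transport": 1.0,
    "lunch with participants": 1.0,
    "online tutoring": 1.5,
    "preparation of slides": 2.0,
}


def volunteer_hours(actions: list[str], grid: dict[str, float] = CONVERSION_GRID) -> float:
    """Convert logged actions into volunteer hours using the grid."""
    unknown = [a for a in actions if a not in grid]
    if unknown:
        raise ValueError(f"no conversion rate for: {unknown}")
    return sum(grid[a] for a in actions)


def valued_hours(hours: float, hourly_minimum_wage: float) -> float:
    """Value volunteer hours at the legal hourly minimum wage."""
    return hours * hourly_minimum_wage


# Hypothetical project log and wage rate, for illustration only.
hours = volunteer_hours(["edit-a-thon", "transport", "preparation of slides"])
print(hours, valued_hours(hours, hourly_minimum_wage=10.0))  # 7.0 70.0
```

Rejecting actions that are missing from the grid keeps the yearly total from silently undercounting.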

Sunday

Global Metrics

- Program: Global Metrics Retrospective and Revision

- Presentation: Global Metrics Review and Retrospective - Jaime Anstee and Sati Houston.pdf

Global Metrics are needed so that we know what is going on in the movement, including in individual projects, and so that we all talk about the same metrics where possible. They should:

- Give a high-level view of grant-related outcomes.

- Ensure accountability.

- Balance the need for data with the burden to volunteers.

Spectrum of success in using the metrics:

- Global Metrics are useful for understanding outcomes

- Global Metrics are sometimes useful for planning and refining goals & activities

- Global Metrics are useful only in limited cases for making comparisons between grants

Issues with getting the global metrics are grouped in three areas:

- How metrics are defined

- Inconsistent definitions

- Aggregation of data

- How the data is collected

- Tools, including Wikimetrics

- Burden of collecting data

- Attribution of outcomes

- Outcomes that are missing

- Retention

- Capturing different types of contribution

- Partnerships

Aggregation of data leads to a loss of context and dilutes the value of the aggregated data.

More information about the global metrics at: Grants:Learning & Evaluation/Global metrics

The 7 specific global metrics are here: The metrics

Besides global metrics you can also have other metrics that you consider relevant for the project.

See also

- Other presentations